Introduction

Motivation

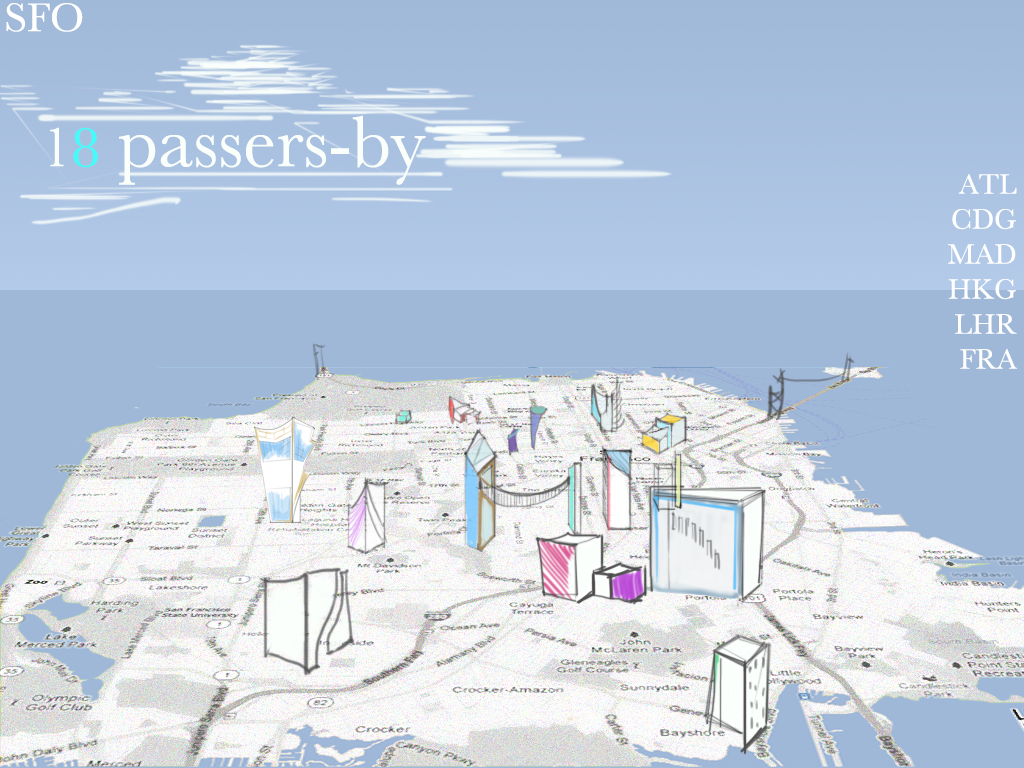

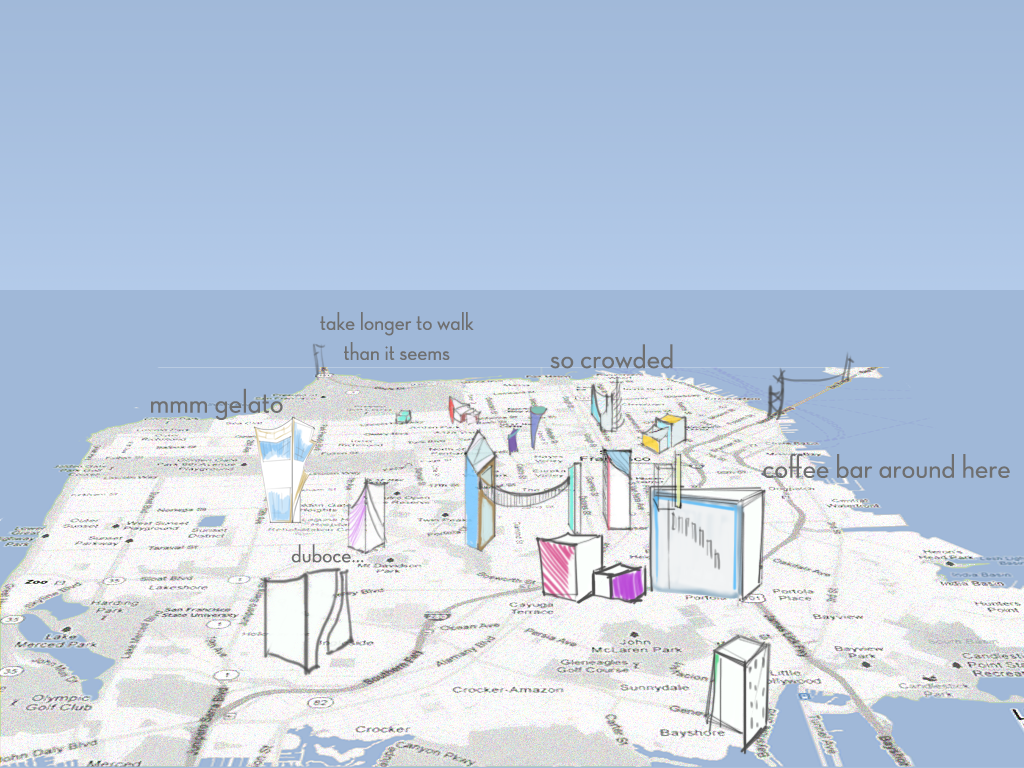

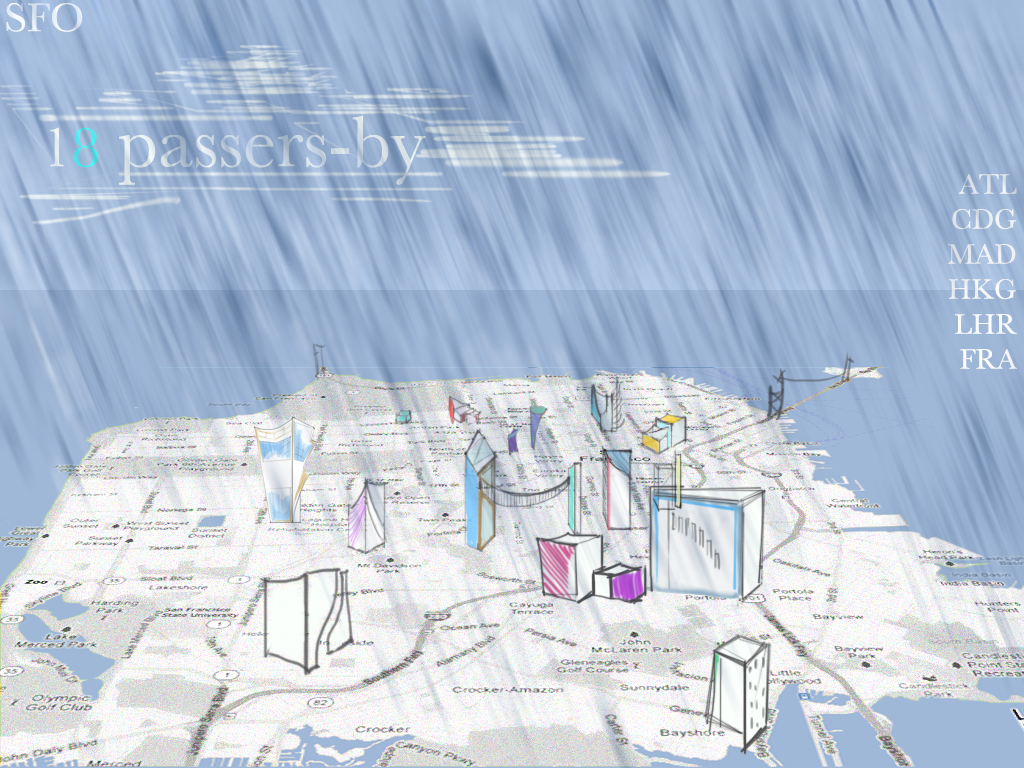

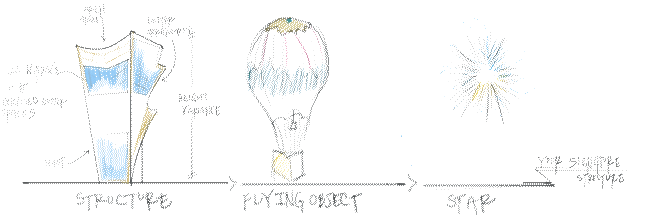

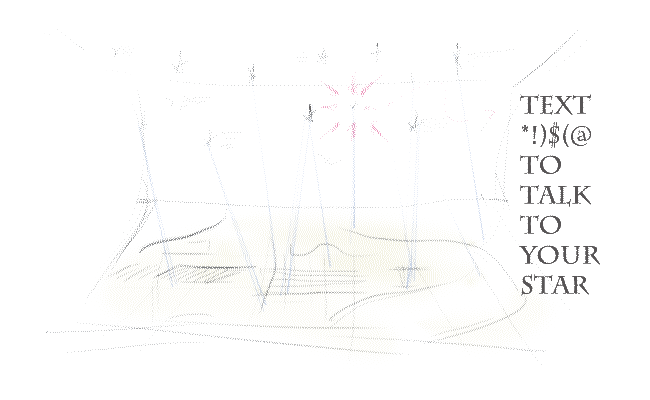

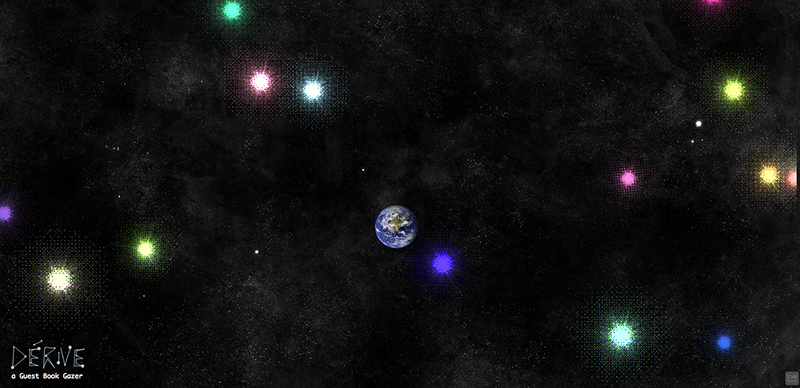

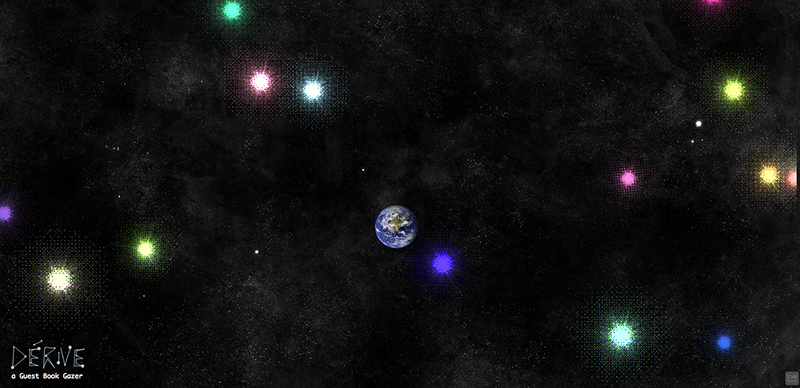

Our project is motivated by a desire to emphasize the forgotten experience of "encounters," unplanned journeys, and random walks, and to address travelers' need to express presence while maintaining anonymity. This questions the supposition that itinerants or visitors lack ambient awareness of how others live in the current environment, and that filling this need suffices to encourage exploration by diminishing action biases against exploring new places (by introducing opportunities for spontaneous decision making). The project does so by drawing on the tradition of guestbooks, which preserve the history of a place. From this we build on users' understanding of guestbooks and extend the guestbook's capabilities. In short, dérive is a re-imagining of the guestbook that ties exploratory commentary to the moment ("in time") rather than "after the fact." We realized that part of the appeal of guestbooks is being able to peruse other travelers' comments without their knowledge. Taking this tradition of leaving a statement, we translated it into the ability to "text" (via the web app) to your digital signature (star) while you explore the city, letting those at the source of the projection see travelers' comments and begin their own journey.

Hypotheses

From this motivation and mulling we can extract this subset of hypotheses:

- Users* gain salience on local vs. global distribution of those around them, who (if sampling is maintained) are also travelers.

- In question: the effect of this knowledge.

- Users* are excited by the digital guestbook with intuitive gestural interactions and engaging visuals. They love the idea of a guestbook but feel that traditional guestbooks are fairly obsolete.

- Users* are attached to the idea of their star being present in this visual/digital system.

- In question: do they expect their star to stay? Would they ever return? Would they want to visit their representation from the remote web app? See the comment history?

- Users* more often than not want to explore and follow up on comments they find compelling, especially if it is related to day planning.

- Users* will need some impetus to really comment through the app after they leave, some reminder.

- Key question on extended usage: the desire to leave more than one comment if any after leaving!

Driving Questions

- Can we encourage spontaneous exploration in new contexts, where it is otherwise less present?

- Will users understand the basis of how to use a digital guestbook?

- Will users "sign" the guestbook while exploring the city?

- How do visitors interpret the ambient comments?

- What role do guestbooks play, and how has that role changed with the real-time remote feed?

- How far can we extend the notion of abstracted presence (in our case signatures)?

- What is the threshold of private and public in these settings?

- How engaging will users find interacting with the guestbook using gestures?

Methods

Critical Tasks

- Signature signing

- Input origin and destination

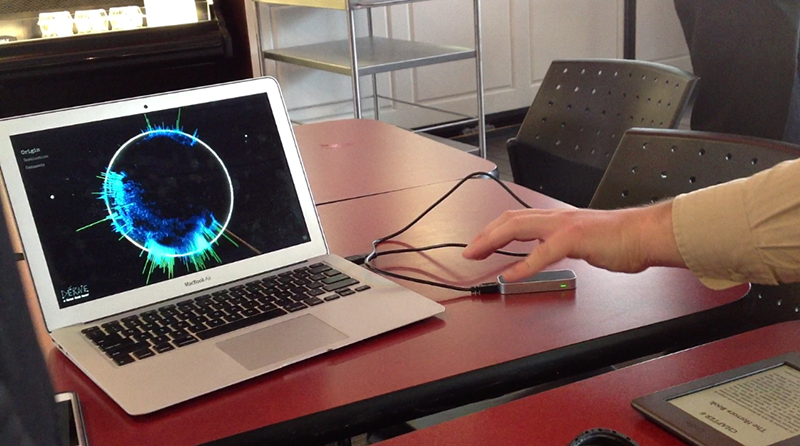

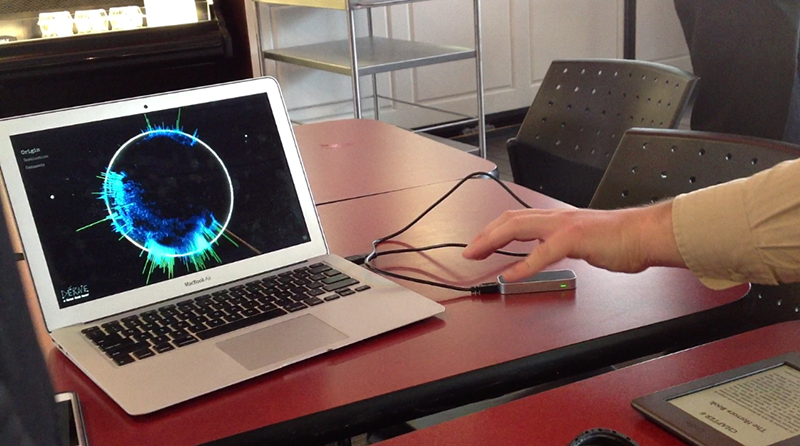

- Switch between star view and globe view

- Explore each view using gestures (including toggling between origin, destination and comments modes in globe view)

- Connect to star through web app for remote use on cellular device

Data

- Length of interactivity

- Time spent exploring on star view

- Time spent exploring globe view

- Types of unspecified gesture use

- Connect to star through web app for remote use on cellular device

- Observed breakdowns or confusions

- Interview notes on their feelings and emotions about the experience

User Recruitment**

We plan to A/B test with other HCI students and non-CS** friends before running impromptu sessions with real visitors.

Then we will go to the Stanford visitor center and recruit campus tourists at random, setting up there and occupying their waiting time.

Results

On Mar. 7th and Mar. 8th, we conducted user testing with 10 representative users.

User 1 (Psych Major)

- Expressed nervousness and feelings that she was 'doing it wrong' due to inaccurate/insensitive feedback on screen from the Leap

- Expected zoom to be triggered by a spreading-fingers gesture, or a pinch-and-pull gesture with two hands.

- Wanted pan (or spin in globe view) to be triggered either by one finger swipe or whole hand swipe in desired direction.

- Also intuitively wanted to "grab" the globe with a claw-hand motion and rotate it that way (kind of like a joystick)

- Would not miss the zoom on the globe view if it did not exist

- Thought that zooming in on the earth to switch to globe view might be better than having an explicit gesture.

- Thought that perhaps we should have a terrain map globe if we only had a few colors for the data points. (This is also more coherent with the earth image in the star view. In this case, perhaps we should also use a terrain map for the location chooser - that should maybe be A/B tested as well since for that particular purpose of choosing location, the black and white map might be less distracting, but again the terrain map would match the rest of the app better).

User 2 (Bio Major)

- Suggested that the signature have acceleration detection like mouse acceleration: greater acceleration would map to moving greater distances across the screen rather than just a 1-to-1 mapping. Said it might feel more natural.

- Played around a lot more, did not care so much that the data was accurate - he was mainly just curious what it could do/what he could make it do/what would happen if he tried such-and-such motion.

- Would miss the zoom in globe view if it did not exist

- Wanted to be able to spin the globe with hand swipe

- Tried "holding" globe between two hands and rotating

- Tried using flat hand palm down as a plane of rotation

- Idea for gesture to return to star view was a clap - bringing both hands together, kind of like closing a book or squishing the earth

- Thought that it would be nice if everything rotated around the earth so the user would never have to navigate beyond the earth but could just "spin" the stars around the earth so the ones in back move to the front. (We have considered this, but the graphical calculations are complicated, could not get it to render as desired when we tried it. May think about trying again if there is time. This sort of layout would allow for more parallel motion between star view and globe view - both would have a spinning sort of navigation)
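
User 2's suggestion of a mouse-style acceleration mapping for the signature can be illustrated with a minimal sketch. This is not code from our prototype; the function name, the gain constants, and the assumption that fingertip displacement arrives per frame in millimeters are all hypothetical and would need tuning against the real sensor.

```python
def accelerated_delta(dx, dy, dt, base_gain=1.0, accel=0.002, max_gain=4.0):
    """Scale a fingertip displacement by a speed-dependent gain.

    dx, dy    -- fingertip displacement since the last frame (assumed mm)
    dt        -- elapsed time since the last frame, in seconds
    base_gain -- gain applied to very slow movement (1.0 = 1-to-1 mapping)
    accel     -- hypothetical constant: extra gain per mm/s of hand speed
    max_gain  -- cap so a fast flick cannot throw the cursor off screen
    """
    if dt <= 0:
        # No time elapsed (or a bad timestamp): do not move the cursor.
        return 0.0, 0.0
    speed = (dx * dx + dy * dy) ** 0.5 / dt
    gain = min(base_gain + accel * speed, max_gain)
    return dx * gain, dy * gain
```

With these placeholder constants, slow and careful strokes stay close to the 1-to-1 mapping, while faster strokes cover more screen distance for the same hand movement.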

User 3 (Visitor Center Desk Assistant, CEE Masters)

- Tried to use a much wider range of angles than the Leap can detect.

- Does not realize/recognize/learn the mapping of gesture to screen-- that little movement can make a big dip in canvas.

- In signature mode user actually pinches to write their name, somewhat similar to holding a pencil, rather than thinking of their hand as a pencil.

- About a minute and fifteen seconds of interaction time before high tension sets in and they give up.

- Tendency to touch the sensor when it doesn't work and at the very beginning.

- Got stressed when the phone rang.

User 4 (Astronomy/SLAC PhD)

- Fearful to use and be observed using.

- Seemed skeptical

- Was unclear on their angle of use for input.

- Needed very clear gestural instructions.

User 5 (New Student Visiting from NY with Mother)

- [In this study we didn't give "what the app can do" but asked "what do you want to do" at each stage, we found that we supported their wants!]

- Wanted to see Earth. (we then toggle to globe view)

- Wanted to see the entire signature.

- Too close to the Leap most of the time (directed by mother to pull back).

- Much younger (high school), not familiar with being user studied or with this tech.

- User asked whether they could talk to other people (we see our comments mechanism as fulfilling this need).

- User has trouble finding cursor to place themselves.

User 6 (Businesswoman from San Francisco)

- [Most skeptical user]

- Talked a lot about her behavior at airports, about eyeballing other people and whether or not she can speak to them.

- Assessing whether other people are bored too.

- Wants to meet up with other people she doesn't know to kill time in the airport.

- Frequent international traveler who yearns to talk to people.

- Friends around the user gave her a hard time.

User 7 (Director of Architectural Design Department)

- 3D more exciting to those that work with 3D in their profession/studies.

- Learning curve steep but fast.

- Likes the guestbook idea.

User 8 (EE Freshman, Tech Geek)

- Played with the Leap demo a bit before testing.

- Signed very smoothly and commented that this is very sleek; wished he could see the entire signature.

- Had trouble moving the cursor around the map, but understood the instruction and successfully confirmed by putting in another hand.

- But when inputting the destination, there was a false positive.

- When seeing the star, he naturally started to explore possible gestures to navigate through the space and quickly figured out how to pan or zoom.

- Succeeded in transitioning to globe view with the two-hand gesture. He quickly learned how to play with the globe and concluded that there was momentum in the globe's movement and that it's easier to control the globe with two hands.

- He would like to inspect a specific region on the globe, but with the current gesture control it's not easy to do that.

- He suggested it would be better if it's less sensitive.

- He commented he would definitely play with it when he's traveling as it's cool to look at and fun to interact with.

User 9 (Entrepreneur at Bytes Cafe from San Diego)

- When signing, at first he moved his finger very slowly, then he got a sense of how this worked and started to sign smoothly.

- Questioned the definition of origin, asking whether it means place of birth or just the place he's flying from. He suggested the latter definition would make more sense in airports.

- Had trouble moving the cursor across the map; the confirmation gesture didn't work.

- Liked the concept of leaving marks in places he has been.

- When navigating in the star view, he naturally moved his hand up and down but nothing happened. He didn't figure out that he could use two fingers back and forth to zoom in/out after I told him so. He discovered by himself how to pan left and right.

- Would like to zoom closer to his star and other people's stars.

- In the globe view, he commented that the visualizations, specifically the spikes on the globe are very creative and interesting.

- Wished to make more sense from the data presented, such as some information about the people who contributed to one spike, what are the names of some isolated islands (fewer people have identified them as origins or destinations).

- Wished that there could be some way to reveal people's purposes of being here, such as vacation, conference, even events like SXSW.

- He thought the comments were so open-ended that people might not know what comments to leave.

User 10 (CS PhD from South Africa)

- When signing, commented that he had no idea how large the sensing area is. Suggested there could be a calibration procedure, where the user touches the four corners of the screen to get a sense of the area.

- Commented that moving the cursor on the map using the Leap is very frustrating. He couldn't get the cursor to his origin, South Africa.

- When navigating in the star view, he suggested that when the viewpoint is farther away, moving slightly should trigger larger movements, while when the viewpoint is closer, moving slightly should trigger smaller movements.

- Gesture to transition to the globe view didn't work out.

- Didn't see the connection between the star view and the globe view.

- First he naturally used two hands to control the globe.

- Commented the visuals of the globe are very impressive but the gestures are frustrating as the globe didn't behave the way he expected.

- He had trouble getting South Africa to face him.
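
User 10's suggestion that pan sensitivity should depend on how far away the viewpoint is can be sketched as below. This is only an illustration under stated assumptions: `pan_step`, `reference_distance`, and the clamp limits are hypothetical names and values, not part of our current implementation.

```python
def pan_step(hand_delta, camera_distance, reference_distance=100.0,
             min_scale=0.1, max_scale=10.0):
    """Scale a hand movement into a pan distance based on viewpoint distance.

    hand_delta         -- hand displacement along one axis since the last frame
    camera_distance    -- current distance from the viewpoint to the scene
    reference_distance -- hypothetical distance at which the mapping is 1-to-1
    min_scale/max_scale -- clamp so the pan never freezes or becomes wild
    """
    scale = camera_distance / reference_distance
    scale = max(min_scale, min(scale, max_scale))
    return hand_delta * scale
```

Far from the stars a small hand movement pans a long way; close to a star the same movement gives fine control, which might also help users inspect one region of the globe.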

General Conclusion

- Users thought graphics were cool, thought Leap control would be cool if it were smoother and more accurate

- Zoom was triggered too easily, especially in globe view

- Users understood the "put in another hand to confirm" instruction very quickly. It was interesting to note that they put the second hand in in the same pointing position as the first. But this gesture didn't work most of the time.

- Users wanted the signature to stay on-screen. One user said otherwise she wasn't sure it was actually registering anything, or if it was just showing her where she was on the screen.

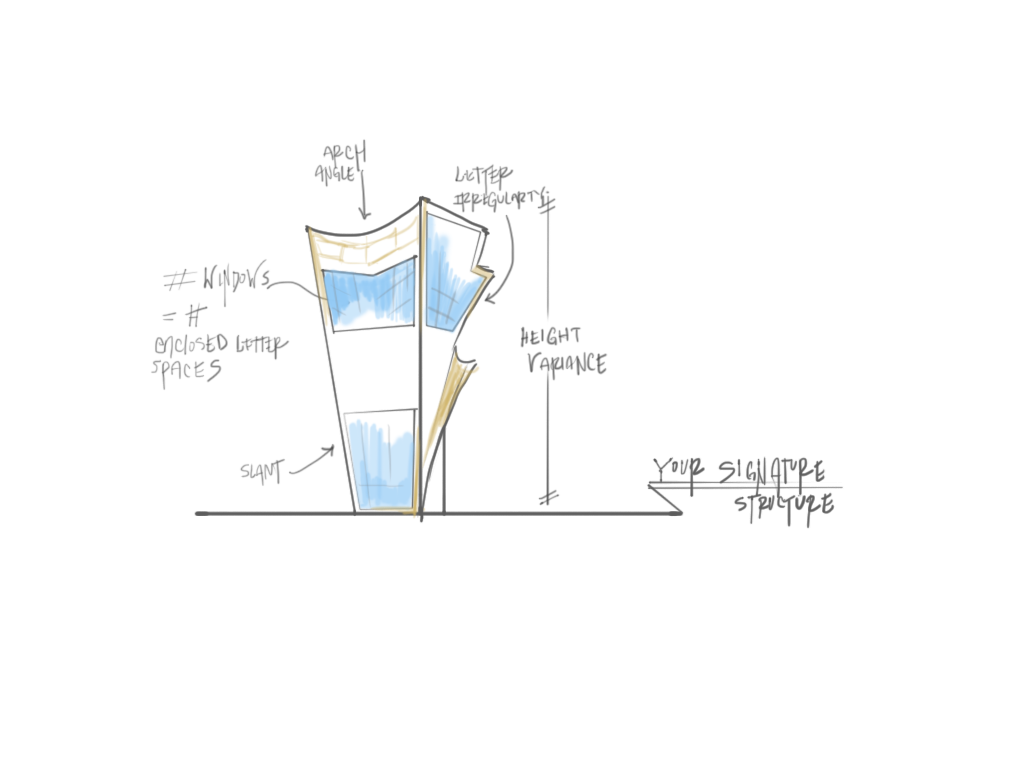

- Users need to feel connected to their star - why is it "theirs"? How does its generation have anything to do with them (their signature).

- Users asked what they were supposed to sign. We had supposed that signing a guestbook was familiar enough that they would assume their names, but perhaps we need more explicit instructions (Vicky's idea: to have a star swoop through the signature space and write "sign" or "signature" in a .gif before the user starts signing. This could also replace the countdown currently in place and still give users that buffer time to prepare)

- Users approved of older stars being farther back.

- Users thought the comment system would be cool, wanted to see that implemented.

- Many of the gestures users performed were in fact very close to what we had intended, but they gave up on them and continued trying different things because they weren't perfectly oriented so the Leap didn't pick up their intended motion accurately. It is difficult to teach users ambiently to use gestures because the gestures are best performed when you know the limits of the Leap and that it is looking for fingertips. Users used the whole hand motion, for example, to pan in star view, but it resulted in a jerky motion that didn't map to their entire motion because they had their hand oriented slightly diagonally and fingers were occluding each other as they moved.

Discussion

One thing that we tested in this round of studies was the kind of instruction, or lack thereof, a user needs to learn the set of gestures. We recognize the newness of the input style and decided to first see how users react in the "discovery" process. When verbally instructed on the gestures we recognize for a certain scene, they used their own "understanding" of what that mapped to. We saw the most variety in interpretation when we asked users to "place" themselves on a map. In this scene they are asked to place a second hand to "lock" their choice. Where they placed their hand caused it not to work 60% of the time (6/10 users).

When a user got tired of not getting the gesture correct, we would use our nifty keyboard shortcuts to bypass the "scene/stage/step" in the app.

Our system did not have any gesture instructions (we do have animated gifs for this), in keeping with the "discovery" approach described above; as a result, the testing process was much longer. From this we discovered that users, regardless of instruction, just start making things up when the system doesn't respond quickly enough.

We also did not add the webapp/comment layer, though part of it is implemented. We discovered that without this layer users wanted it.

Implications

From interaction time, and from users' readiness to wave off the gestures as completely not working when they don't respond, we determine that the system really has to be more robust to keep up with their learning curve. This curve was pointed out to us as being steep but quick.

The tendency of users not to know their angle of interaction or "pick-up" with the Leap suggests to us a need to point out the space they can move their hand in. Does this mean we put a glass box around the Leap to a certain height? Or we tape a rectangle on the back wall? Do we limit the amount of space their hand can move in front of the Leap on the y-axis?

We also discovered that the frame of reference for the user is actually the Leap as it sits facing up to them. Since they expect this to be the mapping, we are considering changing the zoom gesture to moving the hand up and down rather than backwards and forwards.
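
As a rough sketch of what an up-and-down zoom could look like, the function below maps palm height to a zoom rate and ignores small movements, which may also help with zoom being triggered too easily. Everything here is an assumption for illustration: the name `zoom_rate`, the resting height, the dead-zone width, the sensitivity, the use of millimeters, and the choice that raising the hand gives a positive rate.

```python
def zoom_rate(palm_height, rest_height, dead_zone=20.0, sensitivity=0.01):
    """Map vertical palm position to a signed zoom rate with a dead zone.

    palm_height -- current palm height above the sensor (assumed mm)
    rest_height -- height treated as neutral, e.g. where the hand entered
    dead_zone   -- hypothetical band around rest_height that produces no zoom
    sensitivity -- hypothetical zoom rate per mm beyond the dead zone
    """
    offset = palm_height - rest_height
    if abs(offset) <= dead_zone:
        # Small drifts of the hand should not trigger zoom at all.
        return 0.0
    excess = abs(offset) - dead_zone
    direction = 1.0 if offset > 0 else -1.0
    return direction * excess * sensitivity
```

The dead zone means the zoom starts from zero at its edge rather than jumping, so a user who overshoots slightly sees only a slow zoom.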

The users tended to keep writing their signature. We had decided that for security reasons drawing the entire signature out needed to be just leaving traces. The users took this as "not registering" and kept writing, so the program wouldn't continue in a timely manner. This leads us to consider adding the persistent line drawing back.

Users consistently had issues finding themselves in the placing stage. Beyond that, they had trouble getting the cursor to where they were from and were frustrated at having to settle for somewhere nearby. This leads us to consider changing the relative mapping of where their finger is to the context, and also doubling the size of the cursor.

We noticed that in situations where the user was not alone, the backseat driver helped troubleshoot usage errors very quickly.

Our more advanced users (mostly from CS) asked how the star was related to the signature and how the stars mapped to the globe. These relationships were lost due to procedural animation complexity and timeouts in the js files that work between events. In prototype two we hope to add indicative stages that show how the stars go to globe view and also illustrate how the signature is mapped to features of the star.